Frequency Scanning Interferometry (FSI) was invented to measure movements of the detectors in the Large Hadron Collider at CERN. Now a team at the UK’s National Physical Laboratory are developing a practical FSI-based instrument for large-scale manufacturing. This may enable the next generation of aircraft with natural laminar flow wings and part-to-part interchangeable assembly. Read the full article, published on SAE.org…

How Gage Studies Can Get it Wrong

A gage study involves real measurements of a calibrated reference, so many people feel it is a completely reliable metric for instrument capability. However, in this article, I use real examples to show how a gage study can get things wrong and how uncertainty evaluation can give a better understanding of variation and bias in a measurement process. Read the full article published on engineering.com…

Unified Uncertainty for Optimal Quality Decisions

I talked about the advantages of a unified, uncertainty-based approach to quality in manufacturing earlier this year at LAMDAMAP. I have now gone into more detail on why a hybrid approach is required. In my presentation at ENBIS in Naples, I gave examples of how MSA and SPC can fail to properly quantify uncertainty resulting from systematic effects. Conversely, the empirical approach of MSA and SPC is required to validate the theoretical models used in uncertainty evaluation.

Title: A Unified Uncertainty based Approach for Optimal Quality Decisions

Presented by: J E Muelaner

Conference: European Network for Business and Industrial Statistics 2017. Naples, Italy

Abstract:

There is no unified understanding of uncertainty in industrial processes and measurements, hampering data-based decision making. Current methodologies include the Guide to the Expression of Uncertainty in Measurement (GUM), Measurement Systems Analysis (MSA) and Statistical Process Control (SPC). Although differences between the methodologies are often simply linguistic, in certain key respects they are fundamental. None of the methodologies is capable of providing a universal uncertainty framework for both processes and measurements. Additionally, current methods aim to achieve fixed levels of metrics such as a ‘six-sigma’ process, a Cpk of 1.4 or a ‘Total GRR’ of 20%. With respect to business aims such as profitability, such arbitrary targets are not optimal for all processes. Although aiming for arbitrary targets based on an incomplete understanding of uncertainty has greatly improved quality, an optimized approach based on valid uncertainty statements will bring significantly greater improvements.

This presentation will first show where current methods are equivalent and where they are fundamentally different, leading to the development of a standardized vocabulary. It then considers where fundamental gaps in each of the methodologies prevent them from providing a universal framework for industrial uncertainty, and shows that new approaches are required to achieve such a framework. Finally, it is demonstrated that a consistent approach to uncertainty, combined with Bayesian statistics, can enable arbitrary targets for process capability to be replaced by optimization of instrument and process selection and the specification of optimal conformance limits.
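The abstract contrasts fixed targets with an optimized, uncertainty-based decision. As a rough illustration, and not the method from the presentation, the sketch below first computes two of the fixed-target metrics (a capability index and a gage R&R percentage of tolerance) and then one simple Bayesian alternative: with a normal prior for the process output and normal measurement error, it computes the posterior probability that a measured part truly conforms, and accepts the part only when the expected cost of accepting is lower than that of rejecting. The capability index uses the overall sample standard deviation (strictly this gives Ppk), the %GRR assumes a 6σ spread, and all data, costs and function names are hypothetical.

```python
from statistics import NormalDist, fmean, stdev

def capability_index(samples, lsl, usl):
    """min(USL - mean, mean - LSL) / (3 * sigma) using the overall sample standard deviation.

    With overall sigma this is strictly Ppk; SPC practice uses within-subgroup sigma for Cpk.
    """
    mean = fmean(samples)
    return min(usl - mean, mean - lsl) / (3.0 * stdev(samples))

def percent_grr_of_tolerance(sigma_grr, lsl, usl, spread=6.0):
    """Gage R&R spread (assumed to be 6 sigma) as a percentage of the tolerance band."""
    return 100.0 * spread * sigma_grr / (usl - lsl)

def probability_conforming(measured, process_mean, process_sigma, meas_sigma, lsl, usl):
    """P(LSL <= true value <= USL | measured value) for a normal prior and normal measurement error."""
    w_process = 1.0 / process_sigma ** 2   # precision of the prior (process spread)
    w_meas = 1.0 / meas_sigma ** 2         # precision of the measurement
    var = 1.0 / (w_process + w_meas)       # posterior variance (conjugate normal update)
    mean = var * (w_process * process_mean + w_meas * measured)
    posterior = NormalDist(mean, var ** 0.5)
    return posterior.cdf(usl) - posterior.cdf(lsl)

def accept_part(p_conforming, cost_false_accept, cost_false_reject):
    """Accept when (1 - p) * C_fa < p * C_fr, i.e. when p > C_fa / (C_fa + C_fr)."""
    return p_conforming > cost_false_accept / (cost_false_accept + cost_false_reject)

# Hypothetical small sample of diameters (mm) against a 10.0 +/- 0.1 mm tolerance.
diameters = [10.02, 9.98, 10.01, 9.99, 10.00]
print(f"capability index: {capability_index(diameters, 9.9, 10.1):.2f}")
print(f"%GRR of tolerance: {percent_grr_of_tolerance(0.01, 9.9, 10.1):.1f} %")

# Hypothetical process sigma 0.03 mm and gage sigma 0.01 mm; an escaped
# non-conforming part is assumed to cost 10x a scrapped good part.
for y in (10.00, 10.09, 10.12):
    p = probability_conforming(y, 10.0, 0.03, 0.01, 9.9, 10.1)
    print(f"measured {y:.2f} mm: P(conforming) = {p:.3f}, accept = {accept_part(p, 10.0, 1.0)}")
```

Because the acceptance threshold is C_fa/(C_fa + C_fr), the costs implicitly set guard-banded conformance limits: the more expensive a false accept, the further inside the tolerance a measured value has to lie.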

Unified Uncertainty-based Quality Improvement

Within manufacturing, different methodologies are used to quantify uncertainty. These include uncertainty evaluation (GUM), measurement systems analysis (MSA) and statistical process control (SPC). The different methods are used at different stages: GUM for instrument calibrations, MSA for factory measurements and SPC for production processes. Each is well adapted to the domain within which it is used, but all fundamentally deal with the same quantities and considerations. Differences in terminology and approach lead to confusion and a lack of traceability as uncertainty is propagated through the manufacturing process. This work, presented at the LAMDAMAP conference, sets out an initial framework for a unified uncertainty-based approach, which treats manufacturing processes and factory measurements in the same way as instrument calibrations.
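As a simple illustration of treating calibration, factory measurement and process variation within one uncertainty framework, the sketch below combines independent standard uncertainties by root-sum-of-squares (the GUM law of propagation with unit sensitivity coefficients and no correlation), then removes the measurement contribution from an observed SPC spread to estimate the true process variation. The independence assumption, the budget entries and the numbers are illustrative, not taken from the paper.

```python
import math

def combined_standard_uncertainty(contributions):
    """Root-sum-of-squares of independent standard uncertainties (unit sensitivity, no correlation)."""
    return math.sqrt(math.fsum(u ** 2 for u in contributions.values()))

def expanded_uncertainty(u_c, k=2.0):
    """Expanded uncertainty U = k * u_c; k = 2 gives roughly 95 % coverage for a normal distribution."""
    return k * u_c

def true_process_sigma(sigma_observed, u_measurement):
    """Observed variance = process variance + measurement variance, assuming independence."""
    if u_measurement >= sigma_observed:
        raise ValueError("measurement uncertainty swamps the observed variation")
    return math.sqrt(sigma_observed ** 2 - u_measurement ** 2)

# Hypothetical measurement uncertainty budget, all values in µm.
budget = {
    "reference calibration (U = 4 µm, k = 2)": 2.0,
    "repeatability from gage study": 4.0,
    "reproducibility from gage study": 4.0,
}
u_meas = combined_standard_uncertainty(budget)
print(f"u_c = {u_meas:.1f} µm, U (k = 2) = {expanded_uncertainty(u_meas):.1f} µm")

# Hypothetical SPC chart showing an observed standard deviation of 10 µm.
print(f"estimated true process sigma = {true_process_sigma(10.0, u_meas):.1f} µm")
```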

Title: A Unified Approach to Uncertainty for Quality Improvement

Authors: J E Muelaner, M Chappell, and P S Keogh

Conference: Laser Metrology and Machine Performance XII. 2017. Renishaw Innovation Centre, UK

Abstract:

To improve quality, process outputs must be measured. A measurement with no statement of its uncertainty gives no meaningful information. The Guide to the expression of Uncertainty in Measurement (GUM) aims to be a universal framework for uncertainty. However, to date, industry lacks such a common approach. A calibration certificate may state an Uncertainty or a Maximum Permissible Error. A gauge study gives the repeatability and reproducibility. Machines have an accuracy. Process control aims to remove special cause variation and to monitor common cause variation. There are different names for comparable metrics and different methods to evaluate them. This leads to confusion. Small companies do not necessarily have experts able to implement all methods. This paper considers why multiple methods are currently used. It then gives a common language and approach for the use of uncertainty in all areas of manufacturing quality.
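A common language largely means expressing each of the figures listed above as a standard uncertainty. The conversions below are the usual ones under stated assumptions: an expanded uncertainty is divided by its coverage factor, a Maximum Permissible Error (or a quoted machine accuracy) is treated as the half-width of a rectangular distribution, and a %GRR quoted against tolerance is assumed to use a 6σ spread. The function names are hypothetical, and the distribution assumptions should be checked against how each figure was actually specified.

```python
import math

def u_from_expanded(expanded_u, k=2.0):
    """Calibration certificate: expanded uncertainty U with coverage factor k -> u = U / k."""
    return expanded_u / k

def u_from_mpe(mpe):
    """Maximum Permissible Error +/- a, assumed rectangular -> u = a / sqrt(3)."""
    return mpe / math.sqrt(3.0)

def u_from_grr_percent_tolerance(percent_grr, tolerance, spread=6.0):
    """Invert %GRR = spread * sigma_GRR / tolerance * 100 to recover sigma_GRR."""
    return percent_grr / 100.0 * tolerance / spread

# Hypothetical figures, all in mm.
print(f"certificate U = 0.004 (k = 2): u = {u_from_expanded(0.004):.4f}")
print(f"MPE = +/-0.010:               u = {u_from_mpe(0.010):.4f}")
print(f"%GRR = 20 % of 0.3 tolerance: u = {u_from_grr_percent_tolerance(20.0, 0.3):.4f}")
```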

Thermal expansion uncertainty

Thermal expansion is a very important source of uncertainty for many dimensional measurements. Measurements are often corrected for thermal expansion by simply scaling the result. This is only valid if the temperature is approximately uniform throughout the part. This paper explores the validity of this assumption and shows that in many cases we need to look at this effect more closely. Thermal gradients often cause significant shape changes, which can even be the dominant source of uncertainty for some measurements. Recent work presented at the LAMDAMAP conference shows how these effects can be corrected and their uncertainty evaluated.
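To see why a uniform scale correction can miss the dominant effect, the sketch below compares the length change of a beam under a uniform temperature offset with the bow produced by a temperature difference through its depth. It uses the textbook results ΔL = LαΔT for uniform expansion and κ = αΔT/h for the free thermal curvature of a beam with a linear through-depth gradient, taking the bow as the small-deflection sagitta κL²/8. The aluminium-like material, the dimensions and the function names are hypothetical.

```python
def scale_to_reference(measured_length, alpha, temperature, t_ref=20.0):
    """Uniform-temperature scale correction of a measured length back to t_ref (°C)."""
    return measured_length / (1.0 + alpha * (temperature - t_ref))

def uniform_expansion(length, alpha, delta_t):
    """Length change for a uniform temperature offset: dL = L * alpha * dT."""
    return length * alpha * delta_t

def gradient_bow(length, alpha, delta_t_through_depth, depth):
    """Mid-span bow of a free beam with a linear temperature difference through its depth.

    Free thermal curvature kappa = alpha * dT / h; small-deflection sagitta = kappa * L**2 / 8.
    """
    curvature = alpha * delta_t_through_depth / depth
    return curvature * length ** 2 / 8.0

alpha_al = 23e-6            # 1/K, typical of aluminium alloys
length, depth = 2.0, 0.05   # m, hypothetical 2 m long, 50 mm deep beam
print(f"uniform +1 K: {uniform_expansion(length, alpha_al, 1.0) * 1e6:.0f} µm longer")
print(f"1 K through depth: {gradient_bow(length, alpha_al, 1.0, depth) * 1e6:.0f} µm bow")
print(f"scale-corrected length: {scale_to_reference(2.000046, alpha_al, 21.0):.6f} m")
```

With these numbers the bow from a 1 K gradient is about five times the length change from a 1 K uniform offset, and because it is a change of shape rather than scale, no scale correction can remove it.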

Title: Uncertainties in Dimensional Measurements due to Thermal Expansion

Authors: J E Muelaner, D Ross-Pinnock, G Mullineux, and P S Keogh

Conference: Laser Metrology and Machine Performance XII. 2017. Renishaw Innovation Centre, UK

Abstract:

Thermal expansion is a source of uncertainty in dimensional measurements that is often significant and in some cases dominant. Methods of evaluating and reducing this uncertainty are therefore of fundamental importance to product quality, safety and efficiency in many areas. Existing methods depend on the implicit assumption that thermal expansion is relatively uniform throughout the part and can, therefore, be corrected by scaling the measurement result. The uncertainty of this scale correction is then included in the uncertainty of the dimensional measurement. It is shown here that this assumption is not always valid, because thermal gradients can cause significant shape changes. In some cases these are the dominant source of dimensional uncertainty. Methods are described to first determine whether shape change is significant. Where shape changes are negligible but thermal expansion remains significant, the established methods may be used. This paper describes the application of the Guide to the expression of Uncertainty in Measurement (GUM) uncertainty framework, which provides only an approximate solution for thermal expansion because the measurement function is non-linear and its output is non-Gaussian. The uncertainty associated with this approximation is rigorously evaluated by comparison with Monte Carlo Simulation over a wide range of parameter values. It is often necessary to estimate the expected uncertainty for a measurement which will be made in the future. It is shown that the current method for this is inadequate, and an improved method is given.
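The non-linearity mentioned in the abstract is easy to demonstrate. For a thermal length change ΔL = LαΔT, the linearized GUM formula weights each input uncertainty by its sensitivity coefficient, so when the expected temperature offset from 20 °C is close to zero the contribution of u(α) vanishes entirely. The sketch compares this with a Monte Carlo propagation of distributions in the style of GUM Supplement 1. The rectangular distribution for α, the normal distribution for ΔT, all numbers and the function names are illustrative assumptions, and this is not the improved method given in the paper.

```python
import math
import random

def gum_uncertainty(length, alpha_mean, u_alpha, dt_mean, u_dt):
    """Linearized GUM for dL = L * alpha * dT, with L exact:
    u(dL)^2 = (L * dT * u_alpha)^2 + (L * alpha * u_dT)^2."""
    return length * math.hypot(dt_mean * u_alpha, alpha_mean * u_dt)

def monte_carlo(length, alpha_low, alpha_high, dt_mean, u_dt, n=200_000, seed=1):
    """Sample alpha ~ U(low, high) and dT ~ N(dt_mean, u_dt); return the standard
    deviation of dL and a 95 % interval read from the sorted samples."""
    rng = random.Random(seed)
    samples = [length * rng.uniform(alpha_low, alpha_high) * rng.gauss(dt_mean, u_dt)
               for _ in range(n)]
    mean = math.fsum(samples) / n
    std = math.sqrt(math.fsum((s - mean) ** 2 for s in samples) / (n - 1))
    samples.sort()
    return std, (samples[int(0.025 * n)], samples[int(0.975 * n)])

alpha_low, alpha_high = 10e-6, 30e-6                 # 1/K, hypothetical poorly known CTE
alpha_mean = (alpha_low + alpha_high) / 2
u_alpha = (alpha_high - alpha_low) / math.sqrt(12)   # standard deviation of a rectangular distribution
u_gum = gum_uncertainty(1.0, alpha_mean, u_alpha, 0.0, 2.0)   # 1 m part, 20 °C +/- 2 K
u_mc, interval = monte_carlo(1.0, alpha_low, alpha_high, 0.0, 2.0)
print(f"GUM: {u_gum * 1e6:.2f} µm, MC: {u_mc * 1e6:.2f} µm, "
      f"95 % interval: [{interval[0] * 1e6:.1f}, {interval[1] * 1e6:.1f}] µm")
```

In this case the Monte Carlo standard uncertainty comes out roughly 4 % above the GUM value, because the linearized formula drops the α uncertainty completely, and the coverage interval is taken directly from the simulated distribution instead of assuming a normal output.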

Method for traceable coordinate measurements

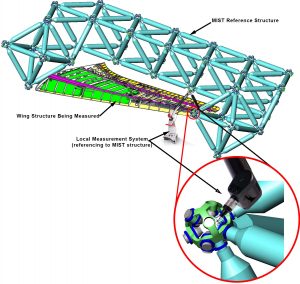

I’ve worked a lot with large-scale coordinate measurements in aircraft and spacecraft assembly. These measurements are typically not fully traceable and their accuracy is limited by environmental effects, especially temperature. For the next generation of aerospace structures, these limitations will need to be overcome to achieve natural laminar flow and part-to-part interchangeability.

We need a measurement system that addresses these issues. I set out to create such a system, working with metrologists at the National Physical Laboratory and physicists from both the University of Bath and the University of Oxford. This open-access paper describes our initial analysis.

Our solution bounces lasers off the surface of steel spheres to directly measure the distance between them. We can accurately locate the center of each sphere, and each sphere’s radius is also accurately known. These measurements are combined into a 3D network, with multiple distance measurements from different directions for each sphere. The coordinates of each sphere’s center can then be calculated with direct traceability to the fundamental definition of the metre.

Our analysis shows that this system could deliver the step change in accuracy needed for the next generation of aerospace structures.
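The step of turning many distances into coordinates is a least-squares problem. A minimal sketch of the simplest case is below: one unknown point located from distances to four points whose coordinates are already known, solved by Gauss-Newton iteration starting from the centroid of the known points. This is generic multilateration, not the AMS algorithm from the paper. In a real network all sphere centers would be adjusted together, and the raw interferometric distances would first have to be related to center-to-center distances using the known radii; this sketch assumes that has already been done, and the station layout and function names are hypothetical.

```python
import math

def solve_3x3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x

def multilaterate(stations, distances, guess, iterations=20):
    """Gauss-Newton least squares: minimize sum of (|x - s_i| - d_i)^2 over the point x."""
    x = list(guess)
    for _ in range(iterations):
        jtj = [[0.0] * 3 for _ in range(3)]   # normal matrix J^T J
        jtr = [0.0] * 3                       # gradient term J^T r
        for s, d in zip(stations, distances):
            diff = [x[k] - s[k] for k in range(3)]
            dist = math.sqrt(sum(v * v for v in diff))
            row = [v / dist for v in diff]    # derivative of |x - s| with respect to x
            resid = dist - d
            for p in range(3):
                jtr[p] += row[p] * resid
                for q in range(3):
                    jtj[p][q] += row[p] * row[q]
        step = solve_3x3(jtj, [-v for v in jtr])
        x = [x[k] + step[k] for k in range(3)]
    return x

# Hypothetical layout: four non-coplanar known points (m) and exact distances to (3, 4, 5).
stations = [(0, 0, 0), (10, 0, 0), (0, 10, 0), (0, 0, 10)]
target = (3.0, 4.0, 5.0)
distances = [math.dist(s, target) for s in stations]
print(multilaterate(stations, distances, guess=(2.5, 2.5, 2.5)))
```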

Paper Title: Absolute Multilateration between Spheres

Authors: J E Muelaner, W. Wadsworth, M. Azini, G. Mullineux, B. Hughes, and A. Reichold

Published in: Measurement Science and Technology, 2017. 28(4)

Abstract:

Environmental effects typically limit the accuracy of large-scale coordinate measurements in applications such as aircraft production and particle accelerator alignment. This paper presents an initial design for a novel measurement technique, with analysis and simulation showing that it could overcome the environmental limitations to provide a step change in large-scale coordinate measurement accuracy. Referred to as absolute multilateration between spheres (AMS), it involves using absolute distance interferometry to directly measure the distances between pairs of plain steel spheres. A large portion of each sphere remains accessible as a reference datum, while the laser path can be shielded from environmental disturbances. As a single scale bar, this can provide accurate scale information to be used for instrument verification or network measurement scaling. Since spheres can be simultaneously measured from multiple directions, it also allows highly accurate multilateration-based coordinate measurements to act as a large-scale datum structure for localized measurements, or to be integrated within assembly tooling, coordinate measurement machines or robotic machinery. Analysis and simulation show that AMS can be self-aligned to achieve a theoretical combined standard uncertainty for the independent uncertainties of an individual 1 m scale bar of approximately 0.49 µm. It is also shown that, combined with a 1 µm m⁻¹ standard uncertainty in the central reference system, this could result in coordinate standard uncertainty magnitudes of 42 µm over a slender 1 m by 20 m network. This would be a sufficient step change in accuracy to enable next generation aerospace structures with natural laminar flow and part-to-part interchangeability.

Calibration of a large scale 3D attitude sensor

Researchers at Tianjin University have developed an attitude sensor located within the reflector target of a total station. This enables large-scale six-degree-of-freedom (6DoF) measurements. I provided some support with the calibration procedure, described in this open-access paper.

Paper Title: Integrated calibration of a 3D attitude sensor in large-scale metrology

Authors: Yang Gao¹, Jiarui Lin¹,², Linghui Yang¹, Jody Muelaner², Jigui Zhu¹, Patrick Keogh²

¹ State Key Laboratory of Precision Measuring Technology and Instruments, Tianjin University, Tianjin 300072, People’s Republic of China

² Department of Mechanical Engineering, University of Bath, Bath BA2 7AY, United Kingdom

Published in: Measurement Science and Technology, 2017. 28

Abstract:

A novel calibration method is presented for a multi-sensor fusion system in large-scale metrology, which improves the calibration efficiency and reliability. The attitude sensor is composed of a pinhole prism, a converging lens, an area-array camera and a biaxial inclinometer. A mathematical model is established to determine its 3D attitude relative to a cooperative total station by using two vector observations from the imaging system and the inclinometer. There are two sets of unknown parameters in the measurement model that must be calibrated: the intrinsic parameters of the imaging model, and the transformation matrix between the camera and the inclinometer. An integrated calibration method using a three-axis rotary table and a total station is proposed. A single mounting position of the attitude sensor on the rotary table is sufficient to solve for all parameters of the measurement model. A correction technique for the reference laser beam of the total station is also presented to remove the need for accurate positioning of the sensor on the rotary table. Experimental verification has demonstrated the practicality and accuracy of this calibration method. Results show that the mean deviations of the attitude angles obtained using the proposed method are less than 0.01°.
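The abstract states that the 3D attitude is determined from two vector observations. A classic, generic way to recover a rotation from two such observations is the TRIAD algorithm, sketched below. This is not claimed to be the authors' measurement model: treating the inclinometer as giving the gravity direction and the camera as giving the direction of the total station's laser beam, the frame conventions and the function names are all assumptions for illustration.

```python
import math

def _normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]

def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def _triad_basis(v1, v2):
    """Orthonormal triad built from a primary vector v1 and a secondary vector v2."""
    t1 = _normalize(v1)
    t2 = _normalize(_cross(v1, v2))
    t3 = _cross(t1, t2)
    return [t1, t2, t3]

def triad(body1, body2, ref1, ref2):
    """Rotation matrix R such that body_vector = R @ reference_vector (TRIAD method).

    body1/ref1 should be the more trusted pair: it is reproduced exactly.
    The two vectors in each pair must not be parallel.
    """
    b = _triad_basis(body1, body2)
    r = _triad_basis(ref1, ref2)
    # R = sum over k of b_k r_k^T, mapping each reference triad vector onto its body counterpart
    return [[sum(b[k][i] * r[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

# Hypothetical check: sensor rotated 90 degrees about the vertical (z) axis.
gravity_ref, beam_ref = [0, 0, 1], [1, 0, 0]     # directions in the total station frame
gravity_body, beam_body = [0, 0, 1], [0, 1, 0]   # the same directions as seen by the sensor
for row in triad(gravity_body, beam_body, gravity_ref, beam_ref):
    print([round(v, 6) for v in row])
```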

Methods for thermally compensated measurements of large structures

Thermal deformations are often the dominant source of uncertainty for high-accuracy measurements of large structures. This open-access paper, published in the International Journal of Metrology and Quality Engineering, presents a hybrid approach to compensating for the resulting errors. Temperatures are measured at discrete points on the part as it undergoes thermal deformation. Finite Element Analysis (FEA) is first used to estimate the full temperature field from these discrete point measurements. A second FEA then estimates the thermal strain and predicts the nominal state of the part at a uniform reference temperature. Repeating this process at a number of thermal loading states can be used to refine and verify the predictions.
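The hybrid approach relies on FEA, but the underlying idea can be sketched in one dimension: estimate a temperature field from a few discrete sensors, integrate the resulting thermal strain, and subtract it to predict the length at the 20 °C reference temperature. In this hypothetical sketch, piecewise-linear interpolation stands in for the first (thermal) FEA and a simple strain integral along a bar stands in for the second (structural) FEA. It captures only length change, not the shape changes that make FEA necessary, and the sensor layout and function names are assumptions.

```python
def temperature_at(x, sensor_x, sensor_t):
    """Piecewise-linear temperature field between sensors (stand-in for a thermal FEA)."""
    if x <= sensor_x[0]:
        return sensor_t[0]
    if x >= sensor_x[-1]:
        return sensor_t[-1]
    for x0, x1, t0, t1 in zip(sensor_x, sensor_x[1:], sensor_t, sensor_t[1:]):
        if x0 <= x <= x1:
            return t0 + (t1 - t0) * (x - x0) / (x1 - x0)
    raise ValueError("sensor positions must be sorted")

def thermal_elongation(length, alpha, sensor_x, sensor_t, t_ref=20.0, n=1000):
    """Integrate the thermal strain alpha * (T(x) - t_ref) along the bar (midpoint rule)."""
    dx = length / n
    return sum(alpha * (temperature_at((i + 0.5) * dx, sensor_x, sensor_t) - t_ref) * dx
               for i in range(n))

def length_at_reference(measured_length, alpha, sensor_x, sensor_t, t_ref=20.0):
    """Subtract the predicted thermal elongation to estimate the length at t_ref."""
    return measured_length - thermal_elongation(measured_length, alpha, sensor_x, sensor_t, t_ref)

sensor_x = [0.0, 5.0, 10.0]     # m, hypothetical sensor positions along a 10 m bar
sensor_t = [20.0, 21.0, 22.0]   # °C, hypothetical readings
print(f"predicted elongation: {thermal_elongation(10.0, 23e-6, sensor_x, sensor_t) * 1e6:.1f} µm")
print(f"length at 20 °C: {length_at_reference(10.00023, 23e-6, sensor_x, sensor_t):.6f} m")
```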

Paper Title: Thermal compensation for large volume metrology and structures

Authors: Bingru Yang, David Ross-Pinnock, Jody Muelaner, and Glen Mullineux

University of Bath

Published in: International Journal of Metrology and Quality Engineering, 2017, 8(21)

Abstract:

Ideally, metrology is undertaken in well-defined ambient conditions. However, in the case of the assembly of large aerospace structures, for example, measurement often takes place in large uncontrolled production environments, and this leads to thermal distortion of the measurand. As a result, forms of thermal (and other) compensation are applied to try to produce what the results would have been under ideal conditions. The accuracy obtained from current metrology now means that traditional compensation schemes are no longer useful. The use of finite element analysis is proposed as an improved means for undertaking thermal compensation. This leads to a “hybrid approach” in which the nominal and measured geometry are handled together. The approach is illustrated with a case study.

A